Building an LLM Chatbot for Car Buyers

Using Generative AI to help online car buyers find exactly what matters most to them

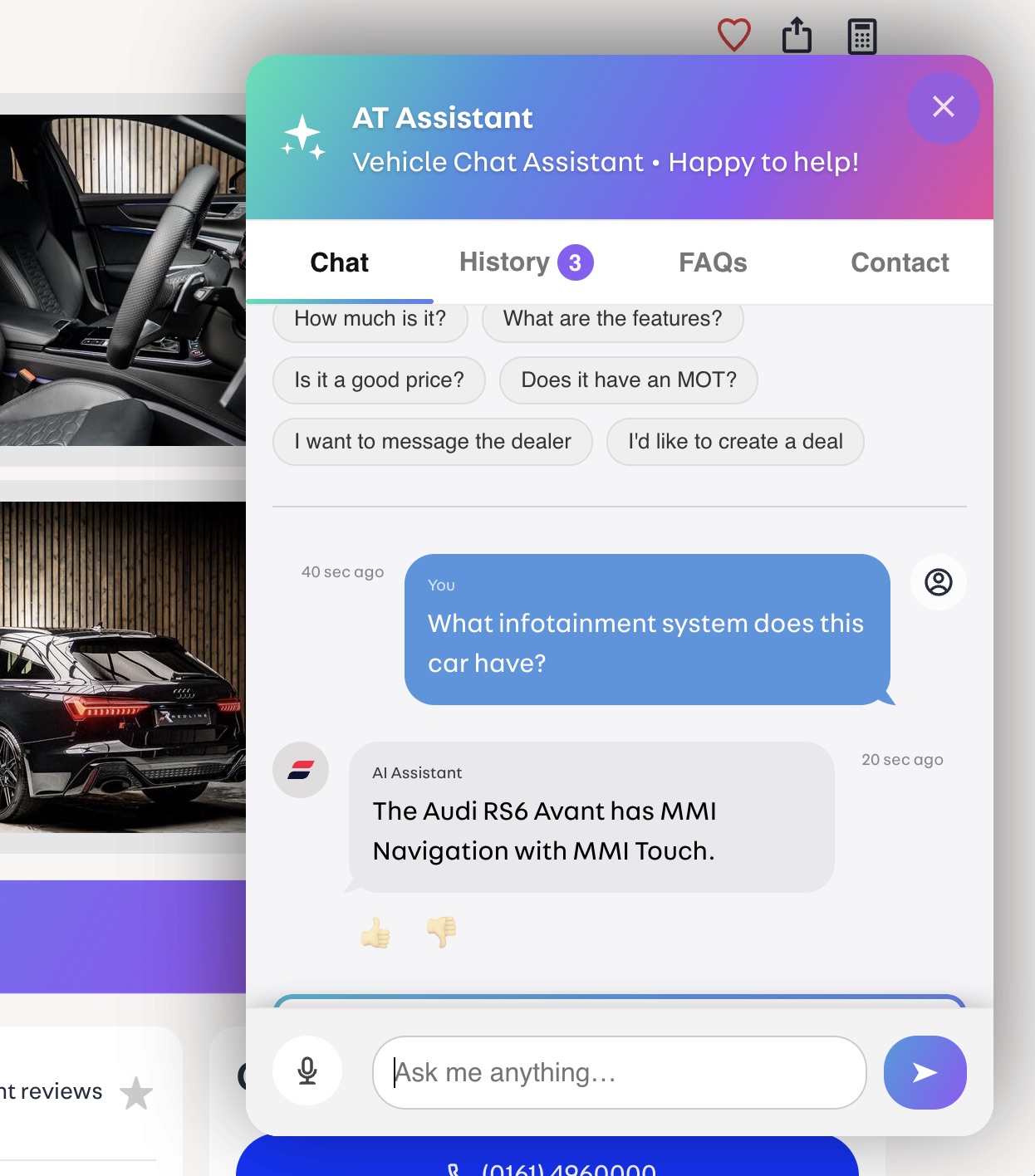

Using GCP, Vertex AI, and Gemini 2.0 Flash, I built a chatbot that reads car advert data from AutoTrader's marketplace and answers user questions about specific vehicles. The chatbot uses guardrails to stay polite, accurate, and on topic while refusing to make up information it does not have. The main takeaway is that while LLMs offer real value for personalising user experiences, the risk of incorrect or offensive outputs means businesses must carefully weigh innovation against brand reputation.

Buying a car online is a challenge. Adverts run to pages of information, technical jargon, and fine detail, forcing everyday buyers to learn a lot before making what is often their second-largest purchase. So the question became: can we remove this friction and help users quickly find the information that matters most to them?

Goal: Build an LLM chatbot that reads advert data from an online car marketplace, and make sure it is safe and reliable to use.

A chatbot like this could transform pages of dense information into a personalised conversation that speaks directly to each user. However, the risk of the LLM producing incorrect or offensive content is a concern, and businesses must balance the value of innovation against potential damage to their brand.

I chose Gemini 2.0 Flash from Vertex AI's Model Garden because I needed a model that was both cost-effective and quick to deploy for a hackathon. This was not a production release, so speed and experimentation were the main priorities.
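The guardrails described earlier (polite, on topic, no made-up information) can be expressed as a system instruction that is combined with the advert data before each conversation. The block below is a minimal sketch of that idea, not the exact prompt used in the project: the rule wording, the `build_system_instruction` helper, and the advert field names are all assumptions made for illustration.

```python
import json

# Illustrative guardrail rules; the wording is an assumption, not the project's prompt.
GUARDRAILS = (
    "You are a polite assistant for an online car marketplace.",
    "Answer only questions about the vehicle in the advert below.",
    "Use only facts found in the advert data; never invent details.",
    "If the advert does not contain the answer, say you do not know.",
)


def build_system_instruction(advert: dict) -> str:
    """Combine the guardrail rules with one advert's data into a single prompt."""
    rules = "\n".join(f"- {rule}" for rule in GUARDRAILS)
    # Serialising the advert as JSON keeps field names explicit for the model.
    advert_json = json.dumps(advert, indent=2, sort_keys=True)
    return f"Rules:\n{rules}\n\nAdvert data (JSON):\n{advert_json}"


# Hypothetical advert record; real marketplace fields will differ.
example_advert = {
    "make": "ExampleMake",
    "model": "ExampleModel",
    "year": 2019,
    "infotainment": "8-inch touchscreen with Bluetooth",
}

instruction = build_system_instruction(example_advert)
print(instruction)
```

A string like this would typically be supplied as the model's system instruction when the chat session is created, so that the rules and the advert data travel together on every request.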

The chatbot successfully transformed dense advert pages into personalised conversations, letting users quickly find specific details like infotainment systems or finance options without having to read the entire advert.

LLMs are a challenge to wrangle. Even with clear instructions, the model would sometimes ignore its guidelines after longer or more difficult conversations. It took a lot of trial and error to get consistent behaviour.
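One common way to reduce this kind of drift is to cap how much conversation history is sent to the model and to repeat a short reminder of the rules with each user message. The sketch below illustrates that approach only; it is an assumption about a possible mitigation, not a description of what was done in this project, and the `Turn` type, `prepare_history`, and `with_reminder` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "model"
    text: str


# Hypothetical reminder text; tune the wording to match the real system instruction.
REMINDER = "Reminder: stay on topic and answer only from the advert data."


def prepare_history(history: list[Turn], max_turns: int = 6) -> list[Turn]:
    """Keep only the most recent turns so old context does not crowd out the rules."""
    if max_turns <= 0:
        return []
    return history[-max_turns:]


def with_reminder(user_message: str) -> str:
    """Append a brief restatement of the guardrails to the user's message."""
    return f"{user_message}\n\n({REMINDER})"


# Example: a ten-turn conversation is trimmed to its six most recent turns.
history = [Turn("user" if i % 2 == 0 else "model", f"message {i}") for i in range(10)]
trimmed = prepare_history(history)
next_message = with_reminder("Does it have Apple CarPlay?")
```

The trade-off is that trimming discards earlier context, so `max_turns` would need tuning against real conversations.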

I can see why businesses often outsource their LLM solutions. If something goes wrong, the blame can be shifted to the provider, and the service can be swapped out before serious brand damage occurs.

Recommendation: Whether building an LLM chatbot makes sense depends on how a business positions itself. While it offers an innovative way to personalise user experiences, the production costs and brand risks of building in-house are significant and need careful consideration. I would only recommend it if the business can provide adequate support and monitoring to manage the LLM's behaviour over time.

This project taught me a great deal about building endpoints, working with Vertex AI's Model Garden, and crafting effective system instructions for LLMs.

If I had more time, I would look to build and train a model instead of using a pre-trained version, and build a more robust backend instead of relying on just the GCP endpoint. I would also add monitoring and sentiment analysis to spot negative conversations, then use those insights to improve the instructions. In future projects, I will have a much better starting point and will not need as much trial and error to get the LLM behaving as expected.
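As a rough illustration of the monitoring idea, the sketch below flags conversations in which a user message contains a high share of negative words, so they can be reviewed by hand. It is a deliberately simple keyword heuristic rather than real sentiment analysis, and the word list, threshold, and function names are assumptions chosen for the example.

```python
# Hypothetical list of negative markers; a real system would use a proper sentiment model.
NEGATIVE_MARKERS = {"useless", "wrong", "terrible", "annoying", "rubbish"}


def negativity_score(message: str) -> float:
    """Return the fraction of words in the message that are negative markers."""
    words = [w.strip(".,!?").lower() for w in message.split()]
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in NEGATIVE_MARKERS)
    return hits / len(words)


def flag_conversations(
    conversations: dict[str, list[str]], threshold: float = 0.1
) -> list[str]:
    """Return the ids of conversations whose worst user message meets the threshold."""
    flagged = []
    for conv_id, messages in conversations.items():
        worst = max((negativity_score(m) for m in messages), default=0.0)
        if worst >= threshold:
            flagged.append(conv_id)
    return flagged


# Example user messages keyed by a made-up conversation id.
conversations = {
    "conv-a": ["What is the mileage?", "Is finance available?"],
    "conv-b": ["This answer is wrong and useless!"],
}
flagged = flag_conversations(conversations)
```

Flagged conversations could then be read alongside the system instruction to see which guideline failed and how to reword it.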